Chang Zou

Yingcai Honors College, University of Electronic Science and Technology of China

shenyizou@outlook.com

Decoding nature, shaping futures.

Chengdu, Sichuan, China

I am Zou Chang, an undergraduate student at the Yingcai Honors College, University of Electronic Science and Technology of China (UESTC), majoring in Artificial Intelligence under the Fundamental Science Program in Mathematics and Physics (Class of 2022). I expect to receive my Bachelor’s degree in 2026.

Currently, I am an intern at the EPIC-Lab, led by Professor Linfeng Zhang, in the School of Artificial Intelligence at Shanghai Jiao Tong University. My primary research interests include, but are not limited to, accelerating image and video generation models.

I also have a strong interest in areas such as Large Language Models (LLMs) and Multimodal Large Language Models (MLLMs). I welcome opportunities for collaboration and discussion!

news

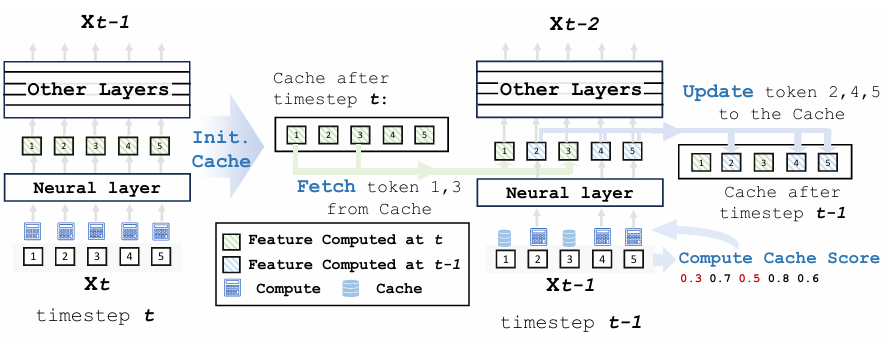

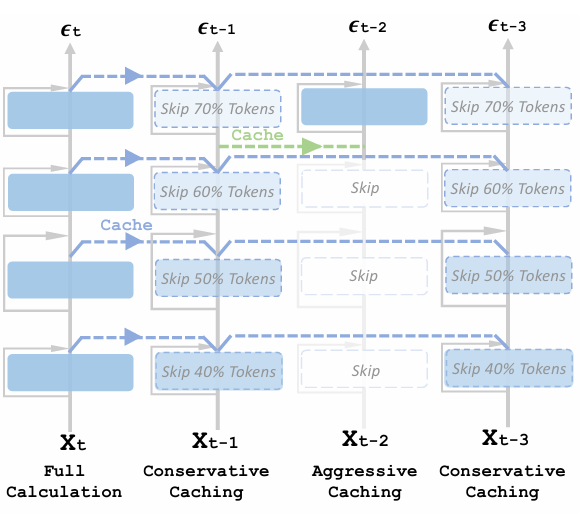

| Date | News |
|---|---|
| Dec 29, 2024 | 🚀🚀 We released DuCa, our work on accelerating diffusion transformers for FREE, which achieves a nearly lossless 2.50× speedup on OpenSora! 🎉 DuCa also overcomes a limitation of ToCa by fully supporting FlashAttention, enabling broader compatibility and efficiency improvements. |
| Oct 12, 2024 | 🚀🚀 We released ToCa, our work on accelerating diffusion transformers for FREE, which achieves a nearly lossless 2.36× speedup on OpenSora! |


latest posts

| Date | Post |
|---|---|
| Dec 04, 2024 | a post with image galleries |
| May 14, 2024 | Google Gemini updates: Flash 1.5, Gemma 2 and Project Astra |
| May 01, 2024 | a post with tabs |